What it is

The Brain is an experimental Spiking Neural Network (SNN) application.

SNNs simulate neurons as they exist in nature. They should not be confused with classical backpropagation networks, which are used for pattern recognition, OCR and similar tasks.

A neuron has many inputs, called synapses, and one output, called the axon. The axon of a neuron is connected to many synapses of other neurons, which builds up a complex network.

Neurons can fire, that is, they spike. A neuron fires when the input at its synapses reaches a certain threshold. When spiking, it stimulates the neurons connected to it, which in turn may fire when their own input exceeds their threshold, and so on. This behavior of neurons is very well researched: Andrew Huxley shared the Nobel Prize for it in 1963.
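The chain reaction described above can be sketched in a few lines of Python. This is only an illustration, not the program's code: the toy network `NETWORK`, its weights, the fixed threshold of 1.0 and the function name `propagate` are all assumptions. Note how C only fires after it has received input from both A and B, while D never reaches its threshold.

```python
# Hypothetical toy network: neuron -> list of (target neuron, synapse weight)
NETWORK = {
    "A": [("B", 1.0), ("C", 0.5), ("D", 0.3)],
    "B": [("C", 0.5)],
    "C": [],
    "D": [],
}


def propagate(network, start, threshold=1.0):
    """Fire `start` and return the neurons in the order they fire.

    Every spike adds its synapse weight to the target's accumulated input.
    A neuron fires as soon as that input reaches the threshold. In this
    sketch, input never decays and each neuron fires at most once, so
    circular connections cannot cause an endless loop.
    """
    accumulated = {name: 0.0 for name in network}
    fired = [start]
    wave = [start]
    while wave:
        for source in wave:
            for target, weight in network[source]:
                accumulated[target] += weight
        wave = [name for name in sorted(network)
                if name not in fired and accumulated[name] >= threshold]
        fired.extend(wave)
    return fired


print(propagate(NETWORK, "A"))  # ['A', 'B', 'C']
```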

The human brain has about 100 billion neurons, each of them with about 10,000 synapses.
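For a sense of scale, multiplying the two rounded figures gives the approximate total number of synapses a full-scale simulation would have to handle:

$$10^{11}\ \text{neurons} \times 10^{4}\ \text{synapses per neuron} \approx 10^{15}\ \text{synapses}$$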

This is the Decade of Artificial Intelligence

Several big research projects are running today, most notably the Human Brain Project in Switzerland.

They plan to build a zettaflop computer by the end of the decade, sometime around 2023 *).

That is, if Moore's Law allows us to build such a computer, which is still impossible today.

*) We are talking about a computer that is 20,000 times faster than today's fastest supercomputer.

I can't wait that long.

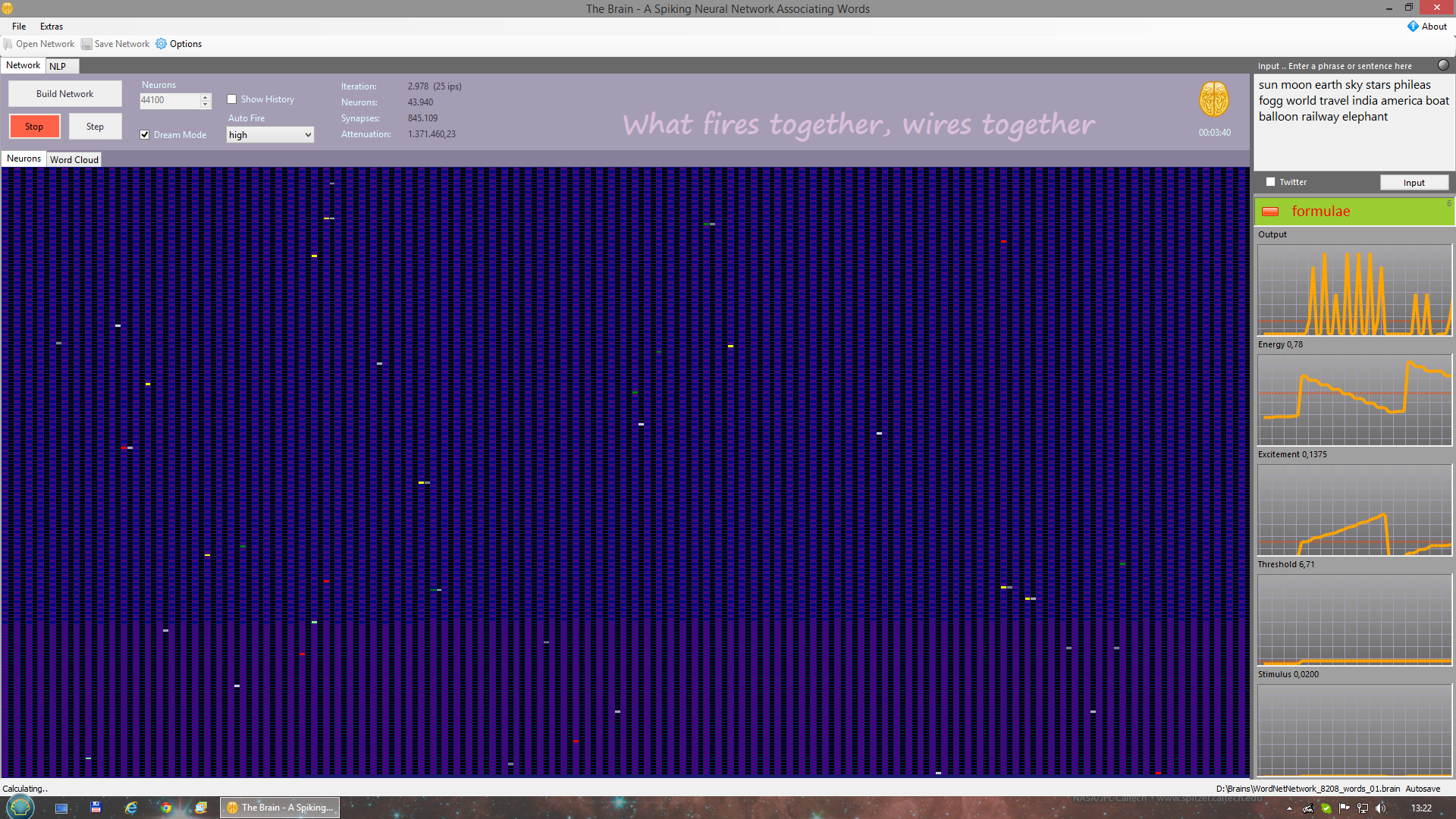

OK, let's take a first glance at a spiking network in action: this is what it looks like when some ten thousand neurons spike together, simulated with a simplified neuron model on a standard personal computer. This project was started in 2010; the first public release was in 2014.

How this program works

My Brain-Model

My Simplified Neuron Model

In this neuron model, the neuron has 5 curves:

- Output

- Energy

- Threshold

- (short term) Excitement

- (medium term) Stimulus

Edit 2020: Please compare this model with the Intel Loihi chip design

https://en.wikichip.org/wiki/intel/loihi#Neuromorphic_Core

OUTPUT is the current output. One spike is resolved into 5 consecutive output values (or 'ticks').
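To make the tick idea concrete, here is a minimal Python sketch of an OUTPUT curve. The spike shape `SPIKE_SHAPE` is an assumption (the actual five values used by the program are not documented here), as is the choice that overlapping spikes simply add up.

```python
# Assumed spike shape: five output values ("ticks") per spike.
# The actual values used by the program are not documented here.
SPIKE_SHAPE = (0.5, 1.0, 0.75, 0.5, 0.25)


def output_trace(spike_starts, length, shape=SPIKE_SHAPE):
    """Return the OUTPUT curve over `length` ticks for spikes that start
    at the given ticks. Overlapping spikes simply add up."""
    trace = [0.0] * length
    for start in spike_starts:
        for offset, value in enumerate(shape):
            tick = start + offset
            if 0 <= tick < length:
                trace[tick] += value
    return trace


print(output_trace([0], 7))  # [0.5, 1.0, 0.75, 0.5, 0.25, 0.0, 0.0]
```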

The neuron has an ENERGY level. It works like in a video game: when you have fired too often, your energy is very low and needs some time to regenerate before you can fire again. This simple feature, combined with the variable threshold, eliminates many problems that such a network can have. It prevents endless loops caused by circular connections, and it suppresses 'epileptic seizures', as I call the state in which all neurons fire at the same time.
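A minimal sketch of such an energy gate, assuming a fixed cost per spike and linear regeneration per tick. The class name `EnergyGate` and all numbers are hypothetical; the program's actual energy curve may look different.

```python
class EnergyGate:
    """Hypothetical ENERGY model: a spike costs energy, energy regenerates
    by a fixed amount per tick up to a maximum, and the neuron can only
    fire while it still has enough energy for one spike."""

    def __init__(self, maximum=10, spike_cost=4, regen_per_tick=1):
        self.maximum = maximum
        self.energy = maximum
        self.spike_cost = spike_cost
        self.regen_per_tick = regen_per_tick

    def tick(self):
        """Regenerate energy for one time step."""
        self.energy = min(self.maximum, self.energy + self.regen_per_tick)

    def try_fire(self):
        """Fire if enough energy is left; return whether the neuron fired."""
        if self.energy >= self.spike_cost:
            self.energy -= self.spike_cost
            return True
        return False


gate = EnergyGate()
print([gate.try_fire() for _ in range(3)])  # [True, True, False]
```

With these assumed numbers, even a neuron that sits in a circular path and is stimulated on every tick can, on average, fire only once every 4 ticks, which is what breaks the endless loop.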

The THRESHOLD is the lowest stimulation level that lets the neuron fire. This value adapts itself to the surrounding noise level and fades back over time. When you pinch yourself in the arm, it hurts. When you do it repeatedly, it hurts less each time. When you wait a while, it feels the same as at the beginning.
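The pinch example can be sketched as a threshold that is pushed up by every stimulation and relaxes back to a base value on every tick. The function `update_threshold` and its rates `adapt` and `fade` are assumptions for illustration, not the program's actual formula.

```python
def update_threshold(threshold, stimulation, base=1.0, adapt=0.5, fade=0.1):
    """One tick of a hypothetical self-adaptive THRESHOLD.

    Each stimulation raises the threshold (habituation), and every tick the
    threshold relaxes a fixed fraction of the way back to its base value.
    """
    threshold += adapt * stimulation
    threshold -= fade * (threshold - base)
    return threshold


# Pinch three times in a row: the threshold rises, so it "hurts less" each time.
t = 1.0
history = []
for _ in range(3):
    t = update_threshold(t, stimulation=1.0)
    history.append(round(t, 4))
print(history)  # [1.45, 1.855, 2.2195]

# Wait a while: the threshold fades back towards its base value.
for _ in range(100):
    t = update_threshold(t, stimulation=0.0)
print(round(t, 3))  # 1.0
```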

Excitement and stimulation are used as synonyms here. There is one function for short-term and another for medium-term excitement or stimulation **).

The short-term EXCITEMENT function is logarithmic *). At first, little energy is needed to get excited; later, more and more energy is needed to get even more excited. The excitement affects the output, its power and the number of spikes (when there is enough energy).
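A minimal sketch of such a logarithmic curve, assuming `log(1 + input)` as the shape. The names `excitement` and `input_needed` and the `scale` parameter are hypothetical; the inverse function shows how the input required for each further level of excitement keeps growing.

```python
import math


def excitement(total_input, scale=1.0):
    """Hypothetical logarithmic EXCITEMENT curve: early input raises the
    excitement quickly, later input adds less and less."""
    return scale * math.log1p(total_input)


def input_needed(target_excitement, scale=1.0):
    """Inverse of excitement(): how much input it takes to reach a level."""
    return math.expm1(target_excitement / scale)


# Each extra excitement level costs more input than the one before.
print([round(input_needed(level), 2) for level in range(4)])  # [0.0, 1.72, 6.39, 19.09]
```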

The STIMULUS is raised with each stimulation and records it by changing very slowly, so it reflects how much this neuron was involved over a medium-term range. This value also persists overnight and affects the auto-fire rate while dreaming. That way, everything that was stimulated or 'recorded' during the day can be replayed in sleep, several times and in different combinations (which the first impression can't offer). The brain can then learn from it by interconnecting new neurons following the Hebbian learning rule, or by strengthening their existing connections.
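One simple way to get such a slowly changing value is a moving average with a small rate. The sketch below assumes exactly that, plus a linear link between stimulus and auto-fire probability in dream mode; `update_stimulus`, `auto_fire_probability` and all constants are hypothetical.

```python
def update_stimulus(stimulus, stimulated, rate=0.01):
    """Hypothetical medium-term STIMULUS: a slow moving average of how often
    the neuron was stimulated (1.0) or not (0.0) per tick."""
    target = 1.0 if stimulated else 0.0
    return stimulus + rate * (target - stimulus)


def auto_fire_probability(stimulus, base=0.001, gain=0.05):
    """Assumed dream-mode rule: neurons that were involved more during the
    'day' fire spontaneously more often at night."""
    return base + gain * stimulus


busy, idle = 0.0, 0.0
for _ in range(100):  # "day": one neuron is stimulated every tick, the other never
    busy = update_stimulus(busy, True)
    idle = update_stimulus(idle, False)
for _ in range(100):  # "night": no more input, the value fades only slowly
    busy = update_stimulus(busy, False)
print(round(busy, 2), auto_fire_probability(busy) > auto_fire_probability(idle))  # 0.23 True
```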

I can't see what else it would take to build a good computer brain.

*) Implementation details: The computations for all curves (except output) are simplified by multiplying a new (delta) value with its former value and a constant factor, or with its reciprocal value, to get logarithmic or quadratic growth.

**) Remarks: Short term means: in minutes. Medium term means: in hours or days. Long term behavior (over years) is not modeled here.
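The implementation note above can be read as the following update step. This is an interpretation, not the program's code: `grow`, `steps` and the factor 0.5 are hypothetical. Scaling the step by the reciprocal of the former value makes growth slow down (the concave shape the note calls logarithmic), while scaling it by the former value makes growth speed up (the convex shape the note calls quadratic).

```python
def grow(value, delta, factor=0.5, accelerate=False):
    """One update step in the spirit of the implementation note (an
    interpretation, not the program's actual code). The step is the new
    delta times a constant factor times either the former value (growth
    speeds up) or its reciprocal (growth slows down). `value` must be non-zero."""
    scale = value if accelerate else 1.0 / value
    return value + delta * factor * scale


def steps(accelerate, n=3, start=1.0):
    """Return the size of each of n successive steps for a constant delta of 1."""
    values = [start]
    for _ in range(n):
        values.append(grow(values[-1], 1.0, accelerate=accelerate))
    return [b - a for a, b in zip(values, values[1:])]


print([round(s, 3) for s in steps(False)])  # [0.5, 0.333, 0.273]
print([round(s, 3) for s in steps(True)])   # [0.5, 0.75, 1.125]
```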

When the program runs, the curves are shown for the most active neuron. In the Network-View, you can right-click a neuron to see its curves, or left-click it to let that neuron fire.

Dream Mode

This brain can be asleep or awake. Check the Dream Mode checkbox to let it dream.

Auto Fire

In nature, every neuron fires spontaneously from time to time. In this program, it does so only in Dream-Mode. You can set the auto-fire rate with the drop-down or disable auto-fire.

The Hebbian Learning Rule

WHAT FIRES TOGETHER, WIRES TOGETHER

When two neurons fire at the same time, they connect or strengthen their connection: not the first time, but after they have fired together several times. In this program, each neuron tracks a list of the best candidates for new synapses, that is, other neurons that have fired at the same time for a while. The new connection is quite thin and far below any threshold, but it grows whenever the two neurons fire together again.
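The bookkeeping described above can be sketched like this. The class `HebbianNeuron`, the promotion count of 3, the initial weight, the growth step and the candidate list size are all assumptions chosen for illustration.

```python
from collections import Counter


class HebbianNeuron:
    """Hypothetical bookkeeping for 'what fires together, wires together'.

    Co-firing neurons first collect votes in a bounded candidate list. Once a
    candidate has fired together with this neuron often enough, a thin
    synapse is created, which then grows with every further co-firing.
    All parameter values are assumptions.
    """

    def __init__(self, name, promote_after=3, initial_weight=0.01,
                 growth=0.01, max_candidates=10):
        self.name = name
        self.promote_after = promote_after
        self.initial_weight = initial_weight
        self.growth = growth
        self.max_candidates = max_candidates
        self.candidates = Counter()  # other neuron -> number of co-firings so far
        self.synapses = {}           # other neuron -> synapse weight

    def fired_together(self, others):
        """Call when this neuron fired in the same iteration as `others`."""
        for other in others:
            if other == self.name:
                continue
            if other in self.synapses:
                self.synapses[other] += self.growth  # existing synapse grows
                continue
            self.candidates[other] += 1
            if self.candidates[other] >= self.promote_after:
                self.synapses[other] = self.initial_weight  # new, thin synapse
                del self.candidates[other]
        # Keep only the best candidates.
        if len(self.candidates) > self.max_candidates:
            self.candidates = Counter(
                dict(self.candidates.most_common(self.max_candidates)))


n = HebbianNeuron("sun")
for _ in range(4):
    n.fired_together(["sun", "warm"])
print(n.synapses)  # {'warm': 0.02}
```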

The Hebbian Learning Rule and Auto-Fire are only activated in Dream-Mode.

Neurons with negative output

This model adds one neuron with negative output to each excitatory neuron and connects both. My considerations suggest that this is necessary to distinguish between different patterns. In nature, damping might also be a function of the glial cells, but this is not established.
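A minimal sketch of how such partner neurons could be added and how their negative output cancels excitation at a target. The prime naming (A → A'), the weights and the functions `add_inhibitory_partners` and `net_input` are assumptions; the extra synapses A → C and B' → C stand in for connections that Hebbian learning might have built.

```python
def add_inhibitory_partners(excitatory):
    """For each excitatory neuron X, add a hypothetical partner X' with
    negative output and connect X -> X'. Returns (signs, synapses)."""
    signs = {}
    synapses = []  # (source, target, weight)
    for name in excitatory:
        partner = name + "'"
        signs[name] = +1
        signs[partner] = -1
        synapses.append((name, partner, 1.0))
    return signs, synapses


def net_input(target, firing, signs, synapses):
    """Sum of the signed outputs arriving at `target` from firing neurons."""
    return sum(signs[src] * weight
               for src, dst, weight in synapses
               if dst == target and src in firing)


signs, synapses = add_inhibitory_partners(["A", "B", "C"])
# Assume Hebbian learning has also connected A -> C and the damping partner B' -> C.
synapses += [("A", "C", 0.6), ("B'", "C", 0.6)]
print(net_input("C", {"A"}, signs, synapses))        # 0.6
print(net_input("C", {"A", "B'"}, signs, synapses))  # 0.0
```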

The Calculation Cycle

In each calculation cycle, called an iteration, the following steps are performed for each neuron:

- Listen (check the inputs from the synapses and calculate all parameters)

- Fire (when the input is above the threshold and when the neuron has enough energy to fire)

- What fires together, wires together (build new synapses or strengthen them, which is displayed as Emphasis)
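The three steps above can be sketched as one function per iteration. This is a hypothetical illustration, not the program's code: the data layout, `iteration`, the spike cost of 1 and the choice to read only the previous iteration's spikes (which would make the listen step easy to run in parallel) are assumptions. The wire step only collects co-firing pairs that a Hebbian rule could then use.

```python
def iteration(weights, fired_before, thresholds, energy, spike_cost=1):
    """One hypothetical calculation cycle over all neurons.

    weights:      target -> {source: weight}
    fired_before: neurons that fired in the previous iteration
    energy:       neuron -> remaining energy (updated in place)
    Returns (fired_now, pairs that fired together).
    """
    # 1. Listen: each neuron sums the inputs from last iteration's spikes.
    #    This only reads the previous state, so neurons could be processed in parallel.
    inputs = {n: sum(w for src, w in weights[n].items() if src in fired_before)
              for n in weights}
    # 2. Fire: input at or above threshold and enough energy left.
    fired_now = set()
    for n, total in inputs.items():
        if total >= thresholds[n] and energy[n] >= spike_cost:
            energy[n] -= spike_cost
            fired_now.add(n)
    # 3. Wire: pairs that fired together are handed to the Hebbian rule.
    together = {(a, b) for a in fired_now for b in fired_now if a < b}
    return fired_now, together


weights = {"A": {}, "B": {"A": 1.0}, "C": {"A": 1.0}, "D": {"B": 1.0}}
thresholds = dict.fromkeys(weights, 1.0)
energy = dict.fromkeys(weights, 1)
fired, together = iteration(weights, {"A"}, thresholds, energy)
print(sorted(fired), together)  # ['B', 'C'] {('B', 'C')}
```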

Other Characteristics

There is no supervising higher entity implemented at all. No global data is processed in any way, and nothing controls the overall behavior.

The reason is quite simple: it would take a second brain to do so.

And on the small scale, there is no kind of 'intelligence' implemented in any neuron. The neuron itself isn't intelligent. It just reacts in a self-adaptive way. Don't forget: in nature, it's a single cell. It likes to be attracted, and it connects with whatever attracts it *).

*) By the way, so does every existing thing in this universe, including but not limited to living things.

Emergence is described as ...

[..] a process whereby larger entities, patterns, and regularities arise through interactions among smaller or simpler entities that themselves do not exhibit such properties.

What you can do with this program

Experiment 1: Build a brain from a book

Natural Language Processing (NLP)

It would be hard to see what every single neuron does if the neurons were identified by numbers. So my approach is to give them names that are words. The words come from books, which you can use as input to construct a new brain. To do so, the program comes with NLP features.

(1) Select the NLP-Tab in the program, paste a book from Project Gutenberg there, and hit Run Book. The text must be in English. The program will then add a new neuron for each new word in the book and initially connect the neurons by their grammar: Subject => Predicate => Object

(2) Then go to the Network-View, enter a sentence or phrase into the text box and hit Input Text. Many of the neurons will fire, and it looks as if the brain collapses. After a while, the brain calms down, because all neurons have found their best thresholds.

(3) Then you can enter the Dream-Mode by checking the check-box and the neurons begin to auto-fire and build new connections.

(4) Switch to the Word-Cloud view to see the words of the neurons that are most active at the moment. When you don't move the mouse, the program switches to full-screen mode and shows an inspiring screen saver while it dreams about the book.

(5) Stop and save the brain. The program has an auto-save feature, which is activated once a brain has been saved. Hit Run and go on.

When reading a book, the program adds four times as many additional empty neurons as word neurons, so that it has a chance to learn.
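A rough sketch of what Run Book does, under clearly simplifying assumptions: instead of a real grammar analysis, each word is simply connected to the next word in its sentence, which matches Subject => Predicate => Object only for simple sentences. The function `build_brain_from_text`, the tokenizer and `spare_factor=4` (taken from the four-times rule above) are hypothetical.

```python
import re


def build_brain_from_text(text, spare_factor=4):
    """Hypothetical sketch of 'Run Book': one neuron per new word, plus
    spare_factor times as many empty neurons. As a simplification, each word
    is connected to the next word of its sentence, which matches
    Subject => Predicate => Object only for simple sentences."""
    words = []         # neuron names, in order of first appearance
    seen = set()
    synapses = set()   # (from_word, to_word)
    for sentence in re.split(r"[.!?]+", text):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        for word in tokens:
            if word not in seen:
                seen.add(word)
                words.append(word)
        for first, second in zip(tokens, tokens[1:]):
            if first != second:
                synapses.add((first, second))
    empty_neurons = spare_factor * len(words)
    return words, synapses, empty_neurons


words, synapses, empty = build_brain_from_text("Cats chase mice. Dogs chase cats.")
print(words, empty)  # ['cats', 'chase', 'mice', 'dogs'] 16
```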

You can download a sample file here that has run for 258 hours.

Experiment 2: Use a brain built from the most frequent English words, connect the neurons by inflections, then by WordNet synonyms, then by all the best literature, and feed it with random input from Twitter

Well, most is already said with the headline 😉

I used the 2+2gfreq list from wordlist.sourceforge.net. The word frequencies are determined from the Google search index. This list also contains all inflections of irregular verbs etc., which I have connected in an additional neuron layer.

Then I used WordNet to connect synonyms in an additional neuron layer.

Then some fine literature was run over this brain to add new connections from the grammar:

You can download this initialized Brain here.

Next, you need a Twitter account. Log in to Twitter, go to the developer section and create a new application there. You'll get a Consumer Key and a Consumer Secret from Twitter, which you can enter in this program's options dialog. Once you're done, you can run the brain, check Dream-Mode so that it can learn, and check the Twitter checkbox next to the input text box. The program will use its NLP functions to fire the neurons from the tweets every 15 seconds (it does a Twitter search for 'news').

Please also try Options | Update Wordcloud every 1 second with a minimum of 1 word to display, to see more action. Please set the auto-fire rate to OFF, because it may add too many random connections too quickly, but still check the auto-fire checkbox.

Some final assumptions

1. Assumption

This is a principle that can be found, and that works, everywhere in nature, from the smallest snail up to the human brain. So it cannot depend too much on a sophisticated neuron model; the neuron model differs from species to species anyway. You just have to understand and meet the right principles behind it. THE SINGLE NEURON IS NOT INTELLIGENT.

2. Assumption

Computers can do it better. Biology has a wiring problem. All these axons need plenty of space. In computers, they are just memory addresses.

3. Assumption

Airplanes don't have feathers. When you build technology based on nature, you don't need to mimic nature exactly. You only have to understand the principles behind it. Technology can be better than nature. No bird can fly at Mach 2.

4. Assumption

Hebb's Learning Rule is the key principle to get anything valuable out of spiking networks.

5. Assumption

It cannot work without damping by inhibiting neurons (or at synapse level).

Why damping is mandatory

Given are six neurons A, B, ..., F. When you let them learn using the Hebbian learning rule, each neuron will be connected with every other neuron after a while. That cannot be the aim, not if we want to be able to distinguish between Pattern 1 and Pattern 2.

When we add six more neurons A', B', ..., F' with negative output, and connect A with A', B with B' and so on, A' and B' can connect with C and thus inhibit C with their negative output. In the same way, D' and E' can inhibit F. Thus the network can tell Pattern 1 apart from Pattern 2 and vice versa.

Experiment 3: Minimal sample test

Animals and Colors. Are they mixed up?

- paste the above text into the NLP window and build the brain by hitting Run Book.

- go to Options and set Min. words to display = 2

- go to Network View

- check Dream-Mode

- set Auto-Fire rate to very high or even extremely high (because there are so few neurons)

- click on Run

- go to Wordcloud View

Will the brain mix up colors and animals when it builds new synapses using the Hebbian Learning Rule?

Remark: The program does not know the meaning of 'not'.

What is Intelligence, then?

Intelligence has no generally accepted definition. It is often associated with cognitive skills, which in turn are related to information processing or "recognition", meaning perception, a notion closely tied to psychology. Unfortunately, even though we say that we now live in the information age, we don't even have a common definition of the concept of information. Not even a rudimentary one.

Since computer science is without doubt a branch of mathematics and has nothing to do with psychology, we want to take this opportunity to venture a new definition of intelligence from a mathematical perspective.

The key word for me is "distinction", or the faculty of judgement: distinction not only of physically visible things, but also of abstract concepts. Even the former varies from species to species. Unlike bees, we cannot see in the ultraviolet range, and unlike bats, we cannot perceive ultrasound. But think about teaching a bat the difference between structuralism and post-structuralism, which brings us to the concepts.

To express distinction mathematically, the "Laws of Form" by George Spencer-Brown can help a lot. Please forget the fantasies of "radical constructivism" *). You had better ignore the Frankfurt School and Gödel, Escher, Bach as well. And please do not mention Kant in my presence, especially if you were born after 1972, because then you belong to the generation that has entirely misunderstood Kant, and you have most likely studied sociology. None of this helps us here at all, and by the way, it is also complete nonsense. I hope I have made myself clear enough to avoid any misunderstandings.

The distinction (distinctio, διάκρισις, διορισμός) is a fundamental activity of thinking. It consists in the active determination or the disclosure of differences and otherness. It is a prerequisite of classification and insight.

Back to Spencer-Brown and his Laws of Form, whose symbolism and algebra are the only approach I know of to express system behaviour *) in general, mathematical terms. This gives it a very special importance, not only in the context of intelligence.

Perhaps the algebra of LoF has so far failed to be recognized by a bigger audience because of our seemingly unshakable understanding of logic, in particular Boolean algebra and the logical calculus *). Boolean algebra, with its three operators "AND", "OR", "EXCLUSIVE OR", seems to be carved in stone and is, so to speak, the "holy trinity of computer science".

But how different would the logical calculus, and thereby also the algebra of LoF, look if we allowed a "NEITHER NOR" besides the others? With regard to distinction, anything that can be distinguished is EITHER the one OR the other, or none of them (NEITHER NOR).

*) Unfortunately, the English Wikipedia is not very complete when it comes to some more advanced philosophical topics. Come on, English-speaking community, you can do better. There is still a lot for you to learn.

Is this still Research or is it Fine Art?

When you look at the program associating words in full-screen mode, you may find it relaxing and inspiring.

If you're a gallery owner or the curator of an exhibition, you might even say:

OMG, THIS IS THE PERFECT COMPUTER INSTALLATION FOR THE TWENTY-FIRST CENTURY!

You're welcome. Please don't forget to invite me for the vernissage then 😉

Features

- Native 64-bit on 64-bit systems (also runs on 32-bit systems)

- Full multi-core parallel calculations

Download "The Brain"

System Requirements

- MS-Windows Desktop - Vista to Windows 10

- .NET Framework 4.0 (already installed on most computers)

- 4 GB RAM, multi-core system

Version 1.0.7, in English

Source Code

Full C# / VS 2013 sources are available upon request.

I need your confirmation that they will be used for educational and research purposes only.

Also listen to the soundtrack for this page on Soundcloud.

*) 'The Brain' is neither a product name nor a brand. It's just a working title for this research project and shouldn't be confused with products or brands from third parties that coincidentally have a similar name.